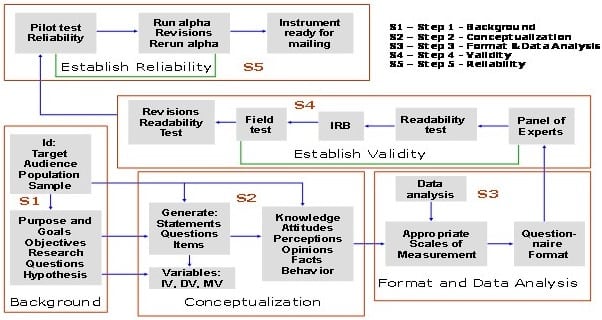

Developing a valid and reliable instrument for a quantitative study involves several steps and takes considerable time. This article describes the sequential steps involved in developing and testing an instrument for data collection.

Tool Development in Research: A Quantitative Approach

Each step depends on careful adjustment and evaluation of the steps before it, and each step should be fully completed before moving on to the next.

Step 1 – Understanding Background

In this initial step, the purpose, objectives, research questions, and hypotheses of the proposed research are examined. This step also involves determining who the audience is (their background, educational and readability levels, and access) and how respondents will be selected (sample vs. population). A thorough understanding of the problem, gained through literature searches and reading, is a must. Good preparation in Step 1 provides the foundation for Step 2.

Step 2 – Conceptualization

After developing a thorough understanding of the research, the next step is to generate statements/questions for the instrument. Content drawn from the literature or theoretical framework is transformed into statements/questions, and a link is established between the objectives of the study and the content that addresses them.

For example, the researcher must specify what the instrument measures: knowledge, attitudes, perceptions, opinions, recall of facts, behavior change, etc. The major variables (independent, dependent, and moderator) are also identified and defined in this step.

Step 3 – Format and Data Analysis

Step 3 focuses on writing the statements/questions, selecting appropriate scales of measurement, and deciding on the instrument's layout, format, question order, font size, front and back cover, and proposed data analysis. The order of questions deserves particular care because it affects the quality of the information collected.

There are two approaches to ordering questions: present them in random order, or arrange them in a logical progression based on the objectives of the study. Scales are tools that help measure a person's reaction to a specific factor, and it is important to understand how the level of measurement (nominal, ordinal, interval, or ratio) determines which data analyses are appropriate.
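To make the link between level of measurement and analysis concrete, here is a minimal Python sketch based on Stevens' classic typology, in which a statistic that is meaningful at a lower level remains meaningful at every higher level. The level names follow the text; the statistics listed for each level are illustrative examples chosen for this sketch, not a complete or authoritative list, and the function name `permissible_statistics` is hypothetical.

```python
# Levels of measurement from least to most informative (Stevens' typology).
LEVELS = ["nominal", "ordinal", "interval", "ratio"]

# Statistics that first become meaningful at each level (illustrative only).
NEW_AT_LEVEL = {
    "nominal": ["frequency counts", "mode", "chi-square test"],
    "ordinal": ["median", "percentiles", "Spearman rank correlation"],
    "interval": ["mean", "standard deviation", "Pearson correlation"],
    "ratio": ["coefficient of variation", "geometric mean"],
}


def permissible_statistics(level: str) -> list[str]:
    """Return statistics suited to a variable measured at `level`.

    Anything valid at a lower level stays valid at every higher level.
    """
    if level not in LEVELS:
        raise ValueError(f"unknown level of measurement: {level!r}")
    stats: list[str] = []
    for lower in LEVELS[: LEVELS.index(level) + 1]:
        stats.extend(NEW_AT_LEVEL[lower])
    return stats


# An item treated as ordinal does not support a mean; an interval score does.
print("mean" in permissible_statistics("ordinal"))   # False
print("mean" in permissible_statistics("interval"))  # True
```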

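For example, if ANOVA (analysis of variance) is one mode of data analysis, the independent variable must be categorical, measured on a nominal scale with two or more levels (e.g., yes, no, not sure), and the dependent variable must be measured on an interval/ratio scale (e.g., a composite score built from strongly agree to strongly disagree items, which is commonly treated as interval data even though each individual item is ordinal).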
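The ANOVA example above can be illustrated with a minimal sketch of the one-way F statistic, computed by hand so that each piece is visible. The group labels and scores are hypothetical, not from the source; in practice the test would be run in SPSS or with a library routine such as `scipy.stats.f_oneway`, which also reports the p-value.

```python
from statistics import mean


def one_way_anova_f(groups: dict[str, list[float]]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA.

    Keys are the levels of the nominal independent variable; values are the
    interval-scale scores of the dependent variable for each group.
    """
    all_scores = [x for scores in groups.values() for x in scores]
    grand_mean = mean(all_scores)
    k, n = len(groups), len(all_scores)  # number of groups, total observations

    # Between groups: how far each group mean lies from the grand mean.
    ss_between = sum(len(s) * (mean(s) - grand_mean) ** 2 for s in groups.values())
    # Within groups: how far each score lies from its own group mean.
    ss_within = sum((x - mean(s)) ** 2 for s in groups.values() for x in s)

    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical attitude-scale scores grouped by a yes / no / not sure item.
scores = {
    "yes": [4.0, 5.0, 6.0],
    "no": [1.0, 2.0, 3.0],
    "not sure": [2.0, 3.0, 4.0],
}
f_stat, df_b, df_w = one_way_anova_f(scores)
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")  # prints: F(2, 6) = 7.00
```

The nominal variable only supplies the group labels; averaging within each group is meaningful because the dependent variable is treated as interval-scale.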

Step 4 – Establishing Validity

Steps 1-3 produce a draft instrument that is ready for validity testing. Validity concerns the amount of systematic, or built-in, error in measurement. It is established using a panel of experts and a field test. Which type of validity (content, construct, criterion, or face) to assess depends on the objectives of the study. Step 4 addresses the following questions:

- Is the questionnaire valid? In other words, does it measure what it is intended to measure?

- Does it represent the content?

- Is it appropriate for the sample/population?

- Is the instrument comprehensive enough to collect all the information needed to address the purpose and goals of the study?
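The source does not say how the expert panel's judgments should be summarized. One widely used option for content validity is Lawshe's content validity ratio, CVR = (n_e - N/2) / (N/2), where n_e is the number of panelists who rate an item "essential" and N is the panel size. This sketch assumes that rating scheme; the item names and counts are hypothetical, and the minimum acceptable CVR depends on panel size, so it should be taken from published critical-value tables rather than from this example.

```python
def content_validity_ratio(n_essential: int, n_panelists: int) -> float:
    """Lawshe's CVR = (n_e - N/2) / (N/2).

    Ranges from -1 (no panelist rates the item essential) to +1 (all do).
    """
    if n_panelists <= 0 or not 0 <= n_essential <= n_panelists:
        raise ValueError("need n_panelists > 0 and 0 <= n_essential <= n_panelists")
    half = n_panelists / 2
    return (n_essential - half) / half


# Hypothetical "essential" counts from a panel of 8 experts for three draft items.
essential_counts = {"Q1": 8, "Q2": 6, "Q3": 3}
for item, n_e in essential_counts.items():
    print(item, round(content_validity_ratio(n_e, 8), 2))
```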

Addressing these questions, together with carrying out a readability test, enhances instrument validity. Approval from the Institutional Review Board (IRB) must also be obtained. After IRB approval, conduct a field test with subjects who are not included in the sample, and make changes as appropriate based on both the field test and expert opinion. The questionnaire is then ready for the pilot test.
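The text does not name a particular readability test. As one common choice, the Flesch Reading Ease score combines average sentence length and average syllables per word, and higher scores indicate easier text. This sketch assumes the word, sentence, and syllable counts have already been obtained (automatic syllable counting is only approximate); the counts below are hypothetical.

```python
def flesch_reading_ease(words: int, sentences: int, syllables: int) -> float:
    """Flesch Reading Ease score; higher values mean easier-to-read text."""
    if words <= 0 or sentences <= 0:
        raise ValueError("words and sentences must be positive")
    words_per_sentence = words / sentences
    syllables_per_word = syllables / words
    return 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word


# Hypothetical counts for the full text of a draft questionnaire.
score = flesch_reading_ease(words=120, sentences=10, syllables=180)
print(round(score, 1))
```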

Step 5 – Establishing Reliability

In this final step, the reliability of the instrument is assessed with a pilot test. Reliability concerns random error in measurement and indicates the consistency, or precision, of the measuring instrument. The pilot test seeks to answer the question: does the instrument consistently measure whatever it measures?

The type of reliability to assess (test-retest, split-half, alternate form, or internal consistency) depends on the nature of the data (nominal, ordinal, interval/ratio). For example, internal consistency is appropriate for questions measured on an interval/ratio scale, whereas test-retest or split-half reliability is appropriate for knowledge questions.

Reliability is established through a pilot test that collects data from 20-30 subjects who are not included in the sample. The pilot data are analyzed using SPSS (Statistical Package for the Social Sciences) or other statistical software. Two pieces of SPSS output are key: the inter-item "correlation matrix" and the "alpha if item deleted" column. First, eliminate items/statements whose correlations with other items are 0, 1, or negative. Then, check the "alpha if item deleted" column to determine whether alpha can be raised by deleting items.

Delete items whose removal substantially improves reliability, but to preserve content, delete no more than 20% of the items. The reliability coefficient (alpha) typically ranges from 0 to 1, with 0 representing an instrument full of error and 1 representing a total absence of error. An alpha of .70 or higher is generally considered acceptable.
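The alpha-based pruning described above might look like the following minimal sketch. It computes Cronbach's alpha as k/(k-1) × (1 - sum of item variances / variance of total scores), recomputes alpha with each item left out (the equivalent of the "alpha if item deleted" column), and drops the most harmful item one at a time, never removing more than 20% of the items. The text does not define a "substantial" gain, so `min_gain = 0.01` is an assumption; the item names and responses are hypothetical, and a real pilot would have 20-30 respondents rather than six.

```python
from statistics import variance


def cronbach_alpha(items: dict[str, list[float]]) -> float:
    """alpha = k/(k-1) * (1 - sum of item variances / variance of total scores)."""
    k = len(items)
    columns = list(items.values())
    totals = [sum(row) for row in zip(*columns)]  # each respondent's total score
    item_variance = sum(variance(col) for col in columns)
    return k / (k - 1) * (1 - item_variance / variance(totals))


def alpha_if_item_deleted(items: dict[str, list[float]]) -> dict[str, float]:
    """Alpha recomputed with each item left out in turn."""
    return {
        name: cronbach_alpha({n: s for n, s in items.items() if n != name})
        for name in items
    }


def prune_items(
    items: dict[str, list[float]], min_gain: float = 0.01, max_share: float = 0.20
) -> tuple[dict[str, list[float]], list[str]]:
    """Drop items one at a time while alpha rises by at least `min_gain`,
    removing no more than `max_share` of the original items."""
    kept = dict(items)
    max_drops = int(len(items) * max_share)
    dropped: list[str] = []
    while len(dropped) < max_drops and len(kept) > 2:
        current = cronbach_alpha(kept)
        name, new_alpha = max(alpha_if_item_deleted(kept).items(), key=lambda kv: kv[1])
        if new_alpha - current < min_gain:
            break  # no deletion improves alpha substantially
        del kept[name]
        dropped.append(name)
    return kept, dropped


# Hypothetical pilot responses (6 respondents, 5 items); Q5 behaves inconsistently.
pilot = {
    "Q1": [4, 5, 3, 4, 2, 5],
    "Q2": [4, 4, 3, 5, 2, 5],
    "Q3": [5, 4, 3, 4, 2, 4],
    "Q4": [4, 5, 2, 4, 1, 5],
    "Q5": [3, 2, 4, 1, 5, 3],
}
kept, dropped = prune_items(pilot)
alpha = cronbach_alpha(kept)
print(f"before: {cronbach_alpha(pilot):.2f}, dropped: {dropped}, after: {alpha:.2f}")
print("acceptable" if alpha >= 0.70 else "below .70")
```

Deleting one item at a time and recomputing mirrors how the "alpha if item deleted" column is usually read: after each deletion the remaining items' values change, so the column is checked again.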

Avoiding Instrumentation Bias

Researchers can take steps to reduce bias in their surveys or questionnaires and ensure that the data collected are accurate and unbiased. The following guidelines for tool development in research help avoid bias in research instrumentation, particularly in surveys and questionnaires:

Use Simple Language

Use simple, clear language and avoid complex terminology or vocabulary. Participants must understand the questions in order to provide accurate responses.

Avoid Leading Questions

Avoid asking leading questions, which can influence participants' responses by pushing them toward a specific answer. For example, instead of asking "Do you believe education is necessary?", ask "How important is education to you?"

Avoid Loaded Questions

Also avoid loaded questions. These questions play on respondents' emotions and rest on assumptions that force them onto the defensive. For example, instead of asking "How can you support an organization that exploits child labor?", ask "What is your opinion of organizations that employ children?"

Use Different Question Types

Use a range of question types, including multiple-choice, Likert scale, and open-ended questions, to minimize potential bias in responses. This also helps create an environment in which participants feel comfortable and supported in freely sharing their opinions and thoughts.

Randomize Question Order

Randomize the order of questions to prevent order effects, that is, bias arising from where a question is placed. This helps ensure that responses are not influenced by the sequence in which the questions are asked.
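The text recommends randomizing question order without specifying a mechanism. A minimal sketch, assuming each respondent has a numeric ID, is to shuffle a copy of the question list with a random generator seeded per respondent, so every order is reproducible and answers can be mapped back to the original item numbers. The seed value and the function name `randomized_order` are hypothetical, and a real instrument might shuffle only within sections rather than across the whole questionnaire.

```python
import random


def randomized_order(questions: list[str], respondent_id: int, seed: int = 2024) -> list[str]:
    """Return a shuffled copy of `questions` for one respondent.

    Seeding with the respondent ID makes each order reproducible, so answers
    can be mapped back to the original question numbers during analysis.
    """
    rng = random.Random(f"{seed}-{respondent_id}")  # per-respondent generator
    order = list(questions)  # copy, so the master list keeps its original order
    rng.shuffle(order)
    return order


questions = ["Q1", "Q2", "Q3", "Q4", "Q5"]
for respondent in (101, 102):
    print(respondent, randomized_order(questions, respondent))
```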

Avoid Socially Desirable Responses

Participants sometimes respond in a way that aligns with societal expectations or what is considered desirable, rather than expressing their genuine opinions or experiences. This phenomenon is known as social desirability bias. To address it, reassure participants that their responses will be kept confidential and anonymous, and avoid questions that invite socially desirable answers.

Pilot-testing

Pilot-test the survey or questionnaire with a small sample of participants to identify potential problems or sources of bias. This step is necessary whether the instrument is adapted from previously published scholarly work or developed by the researcher, because it lets the researcher make any required changes before distributing the survey or questionnaire to a larger group.

Consider Linguistic Differences

Take cultural and linguistic differences into account. Ensure that the questions are suitable and relevant for the population under investigation and, if needed, translate the survey or questionnaire into the respondents' native language.

Monitor Biases

It is important to consistently monitor for bias at every stage of the instrument’s planning, construction, and validation.

Conclusions

Systematic development of a data-collection questionnaire is essential to reduce measurement error arising from sources such as questionnaire content, questionnaire design and format, and the respondents themselves. Developing a thorough understanding of the material and converting it into well-constructed questions is crucial for reducing inaccuracies in measurement.

Attention to detail and a deep understanding of the tool development process are invaluable for educators, graduate students, and faculty members. Failing to follow appropriate, systematic procedures when developing, testing, and evaluating an instrument can undermine the quality and usefulness of the data. Anyone involved in educational and evaluation research must follow these five steps to establish a valid and reliable questionnaire and enhance the quality of their research.